Introduction to the Decision Tree Algorithm

The Decision Tree algorithm is a cornerstone of machine learning. It offers a simple yet powerful method for making predictions. It mimics human decision-making. Which provides an interpretable structure that can classify data or predict continuous outcomes. As we explore its principles and predictive mechanisms, you’ll see why it remains a vital tool in data science.

What is a Decision Tree Algorithm?

A decision tree algorithm is a supervised learning technique. We use it for both classification and regression tasks.

Key Features:

- Easy to visualise and interpret.

- Handles both numerical and categorical data.

- Supports complex decision-making with clear outcomes.

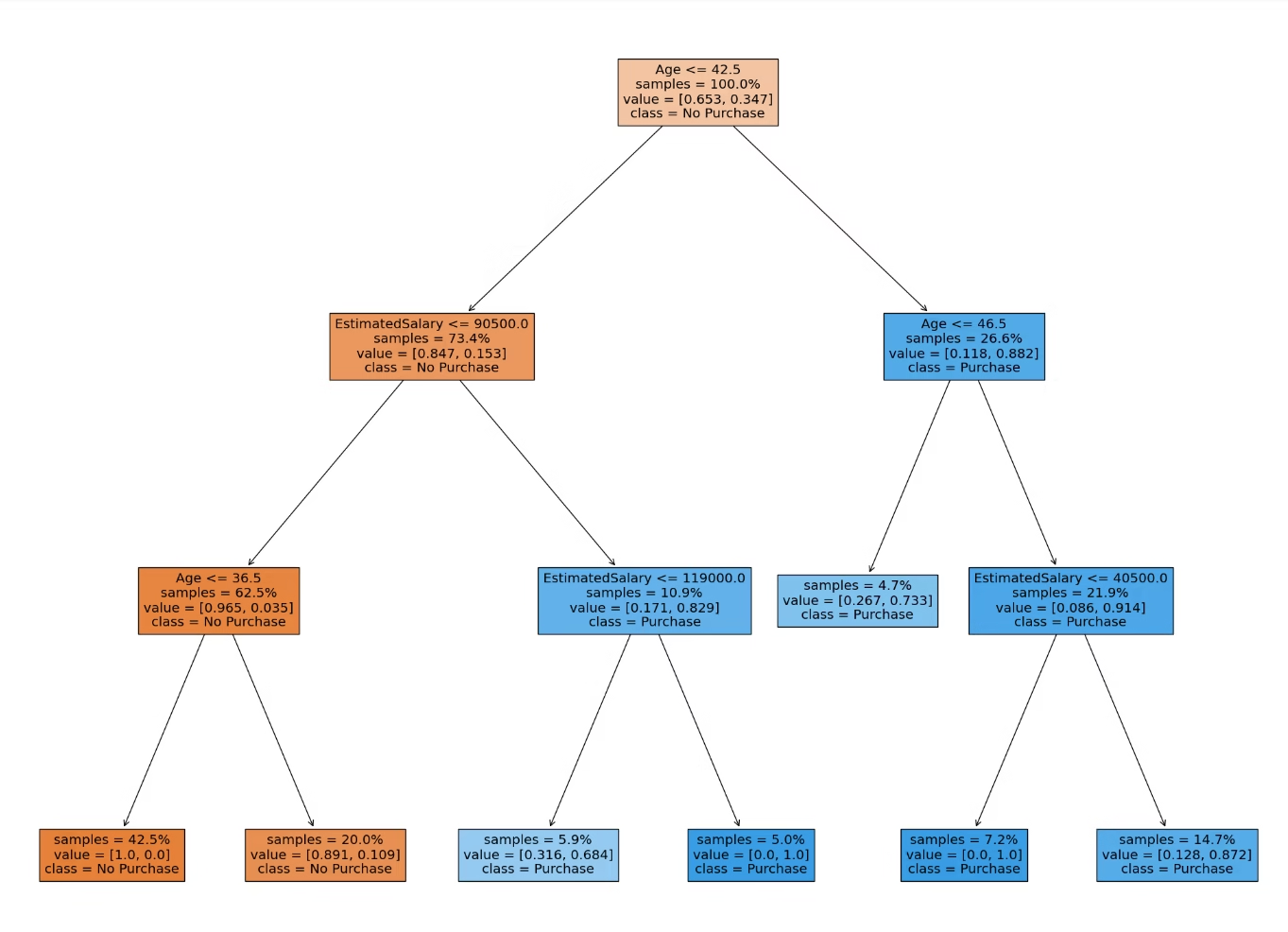

Structure of a Decision Tree

A decision tree comprises:

- Root Node: The starting point which represents the entire dataset.

- Internal Nodes: Intermediate splits based on feature values.

- Leaf Nodes: Final predictions or decisions.

Each split aims to maximise homogeneity within subsets, ensuring the tree efficiently separates data.

The Working Principle of Decision Tree

The decision tree algorithm operates on a principle of recursive partitioning. This divides the data into subsets based on feature thresholds. This ensures accurate predictions by grouping similar data points.

Information Gain and Entropy

Entropy measures the impurity of a dataset. While Information Gain quantifies the improvement in purity after a split.

The formula for entropy is:

[latex]Entropy(S) = -\sum_{i=1}^{c} p_i \log_2(p_i)[/latex]

Here, [latex]p_i[/latex] is the probability of class [latex] i[/latex]. Splits are chosen to maximise Information Gain.

Gini Impurity

Another popular metric is the Gini Index, which evaluates a split’s quality. A lower Gini Index indicates better split quality.

[latex]Gini(S) = 1 – \sum_{i=1}^{c} p_i^2[/latex]

Both metrics help identify the optimal feature for data partitioning.

Steps in Decision Tree Construction

Building a Decision Tree involves:

- Data Preprocessing: Ensuring data quality and handling missing values.

- Splitting the Dataset: Using metrics like Entropy or Gini Index.

- Pruning the Tree: Avoiding overfitting by removing insignificant branches.

How Decision Tree Algorithm Makes Predictions

Prediction follows a path from the root to a leaf node, based on feature values. The tree evaluates each feature sequentially, ensuring predictions align with training patterns.

Classification Example

Suppose we have a dataset of fruits with features like colour and size. A Decision Tree classifies the fruit based on these attributes, predicting categories like “Apple” or “Orange.”

Regression Example

For regression, the algorithm predicts continuous values. For instance, it might estimate housing prices based on features like location, size, and age.

Strengths of Decision Tree Algorithms

- Interpretability: Visual trees make understanding easy.

- Non-linear Data Handling: It can capture complex relationships.

Weaknesses and Limitations

- Overfitting: Trees may become too specific to training data.

- Sensitivity to Noise: Noisy data can degrade performance.

Techniques to Improve Decision Tree Performance

- Pruning Methods: Eliminate unnecessary splits.

- Ensemble Models: Combine multiple trees for better predictions, as in Random Forest.

Random Forest vs. Decision Tree

Random Forest is an ensemble method. Which reduces overfitting by averaging predictions from multiple trees. While slower, it often yields superior results.

Applications of the Decision Tree Algorithm

- Healthcare: Diagnosing diseases based on symptoms.

- Marketing: Predicting customer behaviour for targeted campaigns.

- Finance: Assessing credit risk.

Tools and Libraries for Decision Tree Implementation

- Python (Scikit-learn): Offers a robust DecisionTreeClassifier and DecisionTreeRegressor. Here you can learn more about Scikit-learn.

- R Programming: This is a popular language you can use for statistical modelling. Find out more about R here.

- TensorFlow: For advanced tree implementations. You can find more TensorFlow here.

Future Trends in Decision Tree Algorithms

Emerging trends include hybrid models that combine decision trees with deep learning and their role in explainable AI.

FAQs About Decision Tree Algorithms

- What is the main advantage of a decision tree?

Its simplicity and interpretability make it accessible to both beginners and experts. - How do I prevent overfitting in a decision tree?

Consider using pruning techniques or switching to ensemble methods such as Random Forests. - Can decision trees handle missing data?

Yes, but I recommend preprocessing for better performance. - What’s the difference between classification and regression trees?

Classification trees predict categories; regression trees predict numerical values. - Are decision trees suitable for large datasets?

They work well but can be slow; ensemble methods are better for scalability. - Is Random Forest always better than Decision Trees?

It is not always the case. For small datasets, decision trees might suffice.

Conclusion

The Decision Tree algorithm exemplifies the balance between simplicity and power in machine learning. When you understand its working principle and prediction mechanism. You can leverage it for diverse applications. Explore its tools and techniques to enhance your predictive models today! Contact me if you wish to know more about decision tree algorithms.